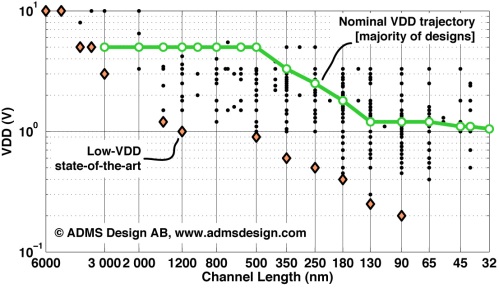

Fig. 1. Supply voltages used for scientifically reported CMOS ADCs over time. Data points representing the evolution of low-voltage state-of-the-art have been highlighted. Trend line fit to 1985-2007 data.

LOW-VOLTAGE EVOLUTION TRENDS: In part 1 of the low-voltage ADC series of posts, we observed the trends for supply voltage (VDD) vs. process scaling (L). In this second post we will complete the picture by looking at VDD trends over time. The timing for introduction of new process technology, and the nominal supply voltage for future nodes, are reasonably well-defined through the continuously updated International Technology Roadmap for Semiconductors (ITRS) [1], but at least two ADC-related aspects are not controlled by the ITRS scaling roadmap:

- The rate at which mainstream ADC research activities will migrate to newer CMOS technology.

- To what extent the ADC research community will attempt to push the envelope with respect to ultra-low voltage operation.

Regarding the former, it was observed in a previous post that the number of early adopters for each node is very small. In any year, the absolute majority of experimental ADCs have so far been implemented in technology 2–5 generations behind the scaling front. How the “mainstream” will behave in the future is next to impossible to predict, as it is influenced by future industrial needs, research grant policies, research community group dynamics, journal and conference publication targets, and many other hard and soft parameters of which we know very little today.

The latter depends on a handful of pioneers choosing to explore the outer limits of ultra-low voltage ADC operation. It was seen in part 1 that there have been rather few attempts to push in this direction, which reveals that only a few groups have historically chosen this focus. If no one decides to have a shot at the current world record – the 0.2 V, VCO-based ∑-∆ modulator presented by Wismar et al. in [2] – we may never see it nudged.

It is therefore very difficult to predict the future VDD trends for analog-to-digital converters, both with respect to the ultra-low voltage state-of-the-art, and the mainstream supply voltage. What we can do, however, is to observe historical trends and use them as a reference.

Observation of ADC supply voltage trends

Figure 1 shows the supply voltages of CMOS A/D-converters reported in scientific publications until Q1-2012. The graph shows the highest supply voltage applied to each circuit. That is, if a circuit used several independent supplies, then VDD = max(VDD1, VDD2, …, VDDn), so that only true low-voltage operation is promoted. The evolution of the low-voltage state-of-the-art has been highlighted.
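As a minimal sketch of the max rule above, the plotted value for a multi-supply design could be computed as follows. The function name `effective_vdd` and the example supply values are hypothetical and not taken from the survey data.

```python
def effective_vdd(supplies_v):
    """Return the VDD a design is plotted at: the highest of its supplies, in volts."""
    if not supplies_v:
        raise ValueError("at least one supply voltage is required")
    return max(supplies_v)


# Hypothetical design: 0.5 V analog core plus a 1.2 V digital/I-O supply.
# It counts as a 1.2 V design, not a 0.5 V one.
print(effective_vdd([0.5, 1.2]))
```

Under this rule, a converter only counts as low-voltage if every one of its supplies is low.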

A similar graph in [3] shows data for all ADCs (CMOS, as well as bipolar and BiCMOS) but only up to Q1-2010. Focusing on CMOS and adding two more years of empirical data yields a different scatter. Nevertheless, the low-voltage state-of-the-art sequence here is nearly identical to that in [3], because the global state-of-the-art almost completely coincides with CMOS ADCs and has not improved since 2006. As observed in [3], the lowest reported VDD remained unchanged at 5 V until 1985, after which it followed a noisy but distinct scaling trend for 20 years. A fit to the state-of-the-art data from 1985–2007 shows that the lowest reported VDD was scaled down by ~2× every five years during this period.

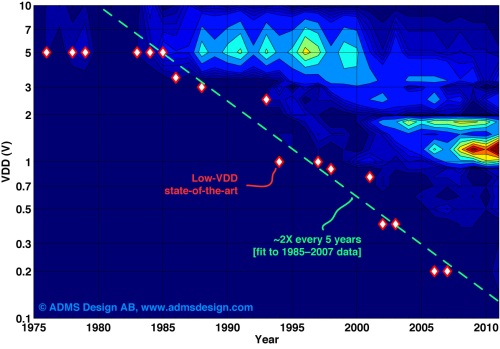

Figure 2 shows the distribution of scientific ADC implementations over supply voltage and publication year as a contour plot. The state-of-the-art data points and trend fit from Fig. 1 have been superimposed for reference. Just as in part 1, manually selected bin centers and non-linear contour levels have been used in order to render a meaningful and readable (but simplified) plot. The main purpose is to illustrate the difference between mainstream VDD and state-of-the-art low-voltage operation each year. It is observed that:

- Mainstream focus remained at 5 V for over 20 years.

- The state-of-the-art VDD scaling front started to go below 5 V around 12–15 years before any noticeable change in the mainstream focus.

- The low-voltage scaling front appears to be approximately 5–6× below, and 10–15 years ahead of the mainstream VDD for each year.

- Supply voltages from 5 V and down seem to have an extremely long lifetime in publications.
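The "5–6× below" and "10–15 years ahead" figures can be cross-checked against the ~2× per five years rate. The sketch below assumes, purely for illustration, that the mainstream follows the same exponential rate as the state-of-the-art front with a fixed delay. The helper name `lead_time_years` is hypothetical.

```python
import math

# Approximate halving period from the 1985-2007 state-of-the-art fit (~2x per 5 years).
HALVING_PERIOD_YEARS = 5.0


def lead_time_years(voltage_ratio, halving_period=HALVING_PERIOD_YEARS):
    """Years needed to scale VDD down by `voltage_ratio` at the given halving period."""
    return halving_period * math.log2(voltage_ratio)


for ratio in (5, 6):
    print(f"{ratio}x lower VDD corresponds to about {lead_time_years(ratio):.1f} years")
```

Under that assumption, a 5–6× voltage gap corresponds to a lead of roughly 12–13 years, which falls inside the 10–15 year range read from the contour plot.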

What do you observe?

Figure 2. Voltage supplies used for scientific ADCs over time. Color represents number of publications. The low-voltage state-of-the-art data points are superimposed along with a scaling trend estimated from 1985–2007 data.

Future VDD scaling for ADCs

I’m very aware that there are good reasons why ultra-low VDD scaling may not be able to go much further, so please note that I’m not saying here that it will. Perhaps it is physically impossible, or functionally meaningless, to go significantly further than the 200 mV operation achieved by Wismar et al. On the other hand, I’m old enough to have heard one “hard” limit after another being suggested for MOST scaling, and we’re still scaling them. So, let’s just see where we would end up if it should turn out to be possible also for the voltage supply:

If the current trend for ultra-low voltage ADCs should be maintained, the low-voltage pioneers would have to publish ADCs according to the following approximate schedule:

| Year | VDD |
|---|---|
| 2015 | 73 mV |
| 2020 | 36 mV |
| 2025 | 18 mV |

Again, I’m not saying that it will happen. But I still found it interesting to see what kind of supplies the historical trend is projecting towards. Does anyone dare to predict a hard limit for A/D-converter supply voltage? Do you believe we will ever see an ADC operating at 73 mV? Is 36 mV impossible? What are the possibilities in context of the impossibilities?

In case anyone wishes to make their own projections, the trend fit expression is:
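Without the exact fit coefficients, a rough stand-in can be built from two numbers given in this post: the ~2× reduction every five years and the 73 mV projection for 2015. The sketch below assumes an exact halving period of five years, which the original fit only approximates. The function name `projected_vdd_mv` and its parameters are hypothetical.

```python
def projected_vdd_mv(year, ref_year=2015, ref_vdd_mv=73.0, halving_period=5.0):
    """Extrapolate the low-voltage front, in mV, assuming VDD halves every `halving_period` years.

    Assumption: anchored at the 73 mV value projected for 2015, with an exact
    5-year halving period. The original fit is only "~2x every five years".
    """
    return ref_vdd_mv * 2.0 ** (-(year - ref_year) / halving_period)


for year in (2015, 2020, 2025, 2030):
    print(year, f"{projected_vdd_mv(year):.1f} mV")
```

With these assumptions the values for 2020 and 2025 come out at about 36.5 mV and 18 mV, close to the schedule above. Small deviations are expected because the real fit is not exactly 2× per five years.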

See also …

ADC performance evolution: Low-voltage operation – part 1

ADC research trends: CMOS node adoption

ADC research trends: Migration to CMOS

References

[1] International Technology Roadmap for Semiconductors (ITRS), 2011 Edition [Online]. Available: http://www.itrs.net

[2] U. Wismar, D. Wisland, and P. Andreani, “A 0.2V 0.44µW 20 kHz analog to digital ∑∆ modulator with 57 fJ/conversion FoM,” Proc. of Eur. Solid-State Circ. Conf. (ESSCIRC), Montreux, Switzerland, pp. 187–190, Sept., 2006.

[3] B. E. Jonsson, “A survey of A/D-converter performance evolution,” Proc. of IEEE Int. Conf. Electronics Circ. Syst. (ICECS), Athens, Greece, pp. 768–771, Dec., 2010.